Over the past few years, I’ve witnessed a significant shift in how applications are developed and deployed, leading me to explore the fascinating world of serverless architecture. In this ultimate guide for beginners, I’ll break down the core concepts, benefits, and challenges of serverless computing so you can understand how it can streamline your development process and enhance your project’s scalability. Whether you are new to cloud technologies or looking to refine your existing knowledge, this guide will equip you with practical insights to harness the power of serverless architecture effectively.

Decoding Serverless: What It Truly Means

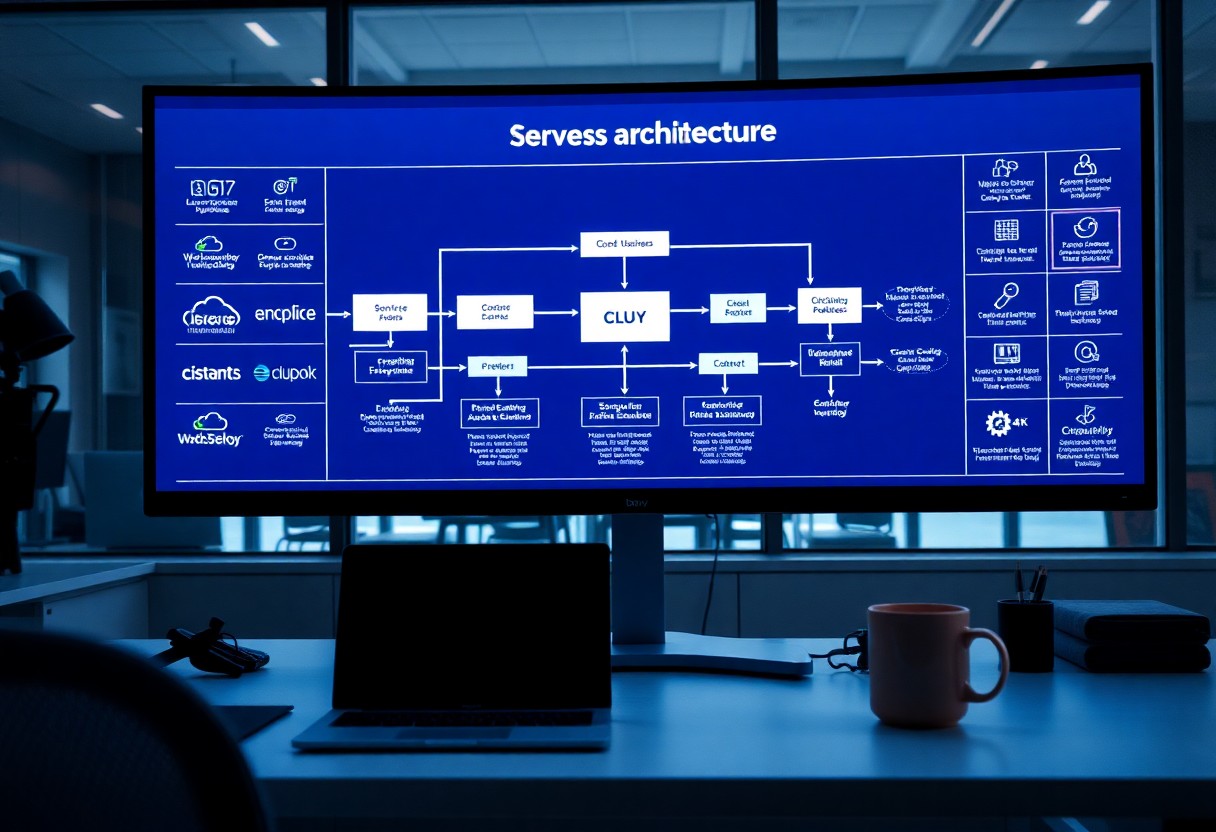

Serverless architecture shifts the focus from traditional server management to streamlined, event-driven computing. Servers still exist, but the cloud provider provisions, patches, and scales them, so you can deploy applications without managing infrastructure and concentrate on writing code and delivering value. Scaling happens automatically in response to demand, which removes much of the waste of over-provisioning and the risk of under-provisioning. As a result, developers can shorten deployment cycles and iterate faster.

The Principles Behind Serverless Computing

The core principles of serverless computing are simplicity, flexibility, and cost-efficiency. You pay for the execution time of your code rather than pre-allocating resources, which can translate into significant cost savings, especially for workloads that sit idle much of the time. The model encourages a microservices architecture, where applications are broken into manageable, independently deployable functions. This design not only allows you to innovate faster but also promotes reliability, as failures are isolated to individual functions rather than impacting the entire application.

The Role of Cloud Providers and Function as a Service (FaaS)

Cloud providers play a pivotal role in serverless ecosystems by offering Function as a Service (FaaS) solutions such as AWS Lambda, Google Cloud Functions, and Azure Functions. You deploy functions without worrying about servers, and the provider handles resource orchestration and scaling. This removes much of the infrastructure complexity, letting you focus on your business logic while leveraging the cloud’s inherent scalability and reliability.

Expanding on this, FaaS abstracts away the infrastructure layer entirely, allowing you to write code that responds to events such as API calls or file uploads. For example, with AWS Lambda, you can configure a function to trigger when an S3 bucket receives a new file, automatically processing it without needing to manage dedicated servers. The flexibility of FaaS means you can deploy updates or rollbacks on individual functions without affecting the entire application, leading to faster iterations and innovation cycles.
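To make the S3 example concrete, here is a minimal sketch of what such a function might look like in Python. The `handler(event, context)` signature and the `Records` layout follow the shape of S3 notification events; `process_object`, the bucket name, and the object key are hypothetical placeholders of mine, and the real processing step is left out.

```python
from urllib.parse import unquote_plus


def process_object(bucket: str, key: str) -> str:
    """Hypothetical placeholder for real work (resize an image, parse a CSV, ...)."""
    return f"processed s3://{bucket}/{key}"


def handler(event, context):
    """Entry point the platform would call for each S3 notification event."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append(process_object(bucket, key))
    return {"processed": results}


# Local smoke test with a hand-built event shaped like an S3 notification.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "uploads/my+report.csv"}}}
    ]
}
print(handler(sample_event, None))
```

Because the handler is just a function that takes an event, you can exercise it locally with a sample payload like the one above before wiring it to a real bucket.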

Economic Upsides: The Cost Structure of Going Serverless

Transitioning to a serverless architecture can significantly enhance your cost-effectiveness by optimizing resource allocation. Instead of paying for a fixed amount of computing power, you’re only charged for the actual execution time of your code. This on-demand model encourages efficiency and allows budget adjustments in alignment with project needs, making it attractive for startups and large enterprises alike.

Pay-As-You-Go Pricing Models

One of the most appealing aspects of serverless architecture is the pay-as-you-go pricing model. You only pay for the resources you consume, eliminating the overhead costs associated with idle servers. This model is especially advantageous during variable workload scenarios, where you can scale according to demand without incurring unnecessary expenses.
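A rough way to see how this works is to estimate a monthly bill from usage. The sketch below assumes a pricing model with a per-request charge plus a charge per GB-second of execution, which is how several FaaS platforms bill; the two rates are placeholder numbers I chose for illustration, not a quote of any provider's current prices, and free tiers are ignored.

```python
def monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int,
                 price_per_gb_second: float, price_per_million_requests: float) -> float:
    """Estimate a monthly bill from usage: compute charge plus request charge."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * price_per_gb_second
    requests = (invocations / 1_000_000) * price_per_million_requests
    return compute + requests


# Placeholder rates for illustration only; look up your provider's current prices.
RATE_GB_SECOND = 0.0000167
RATE_PER_MILLION = 0.20

quiet = monthly_cost(100_000, 200, 512, RATE_GB_SECOND, RATE_PER_MILLION)
busy = monthly_cost(10_000_000, 200, 512, RATE_GB_SECOND, RATE_PER_MILLION)
print(f"quiet month: ${quiet:.2f}, busy month: ${busy:.2f}")
```

The point is that the bill tracks usage: a quiet month costs a fraction of a busy one, and a month with no invocations costs nothing under this model.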

Analyzing Total Cost of Ownership vs. Traditional Architectures

Evaluating the total cost of ownership (TCO) of a serverless architecture reveals several financial advantages over traditional models. While the per-unit cost of compute may seem lower with traditional setups, ongoing management, maintenance, and scaling expenses compound over time and can surpass the pay-as-you-go costs of serverless solutions.

A closer look at the TCO comparison shows that serverless architectures cut operational overhead, such as server upkeep and software updates, that can bog down traditional infrastructures. For instance, startups may see TCO reductions of up to 30% when migrating to serverless, as they avoid capital investment in hardware and reduce the staff time dedicated to server management, though the actual figure depends heavily on the workload. Large corporations with fluctuating workloads can see similar benefits in the form of a leaner budget and resource allocation that tracks demand. Organizations whose usage fits this profile tend to see a quicker return on investment, which supports the financial case for serverless.
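One way to sanity-check a TCO comparison for your own project is a simple break-even calculation. This is a sketch under stated assumptions: the fixed monthly figure (servers plus upkeep time) and the per-invocation cost are hypothetical inputs I picked, and a real comparison would include more line items.

```python
def break_even_invocations(fixed_monthly_cost: float, cost_per_invocation: float) -> float:
    """Invocations per month at which serverless spend equals a fixed server bill."""
    if cost_per_invocation <= 0:
        raise ValueError("cost_per_invocation must be positive")
    return fixed_monthly_cost / cost_per_invocation


def cheaper_option(invocations: int, fixed_monthly_cost: float,
                   cost_per_invocation: float) -> str:
    """Compare usage-based spend against the fixed bill for a given volume."""
    serverless = invocations * cost_per_invocation
    return "serverless" if serverless < fixed_monthly_cost else "fixed servers"


# Hypothetical inputs: $150/month for servers and upkeep vs. $0.000002 per call.
FIXED = 150.0
PER_CALL = 0.000002

print(round(break_even_invocations(FIXED, PER_CALL)))
print(cheaper_option(5_000_000, FIXED, PER_CALL))
print(cheaper_option(200_000_000, FIXED, PER_CALL))
```

Below the break-even volume the usage-based model wins; well above it, a steady, high-volume workload may be cheaper on fixed capacity, which is why it pays to run the numbers for your own traffic.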

Performance Elevated: How Serverless Applications Scale

Serverless applications have a distinct advantage when it comes to scaling. The platform adjusts resources to match demand, so your application can grow without you managing servers. This lets you handle varying workloads efficiently and keeps the application responsive during peak times or sudden traffic influxes.

Auto-Scaling Mechanisms in Action

Auto-scaling is built directly into serverless architectures, which means your application can automatically respond to changes in demand. When user activity spikes, additional instances of your functions are created dynamically and then terminated when demand decreases. This allows for resource allocation that’s both efficient and cost-effective, ensuring that you only pay for what you use while still providing reliable performance.
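To get a feel for how many function instances a given load implies, you can apply Little's law: the number of requests in flight is roughly the arrival rate multiplied by how long each request takes. The sketch below uses that rule of thumb; the request rates and the 200 ms duration are made-up numbers, and it assumes each instance handles one request at a time.

```python
import math


def estimated_concurrency(requests_per_second: int, avg_duration_ms: int) -> int:
    """Little's law: requests in flight = arrival rate x time each one takes."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


# Hypothetical traffic pattern: a quiet period, a spike, then a cool-down.
for rps in (10, 500, 50):
    print(rps, "req/s ->", estimated_concurrency(rps, 200), "concurrent instances")
```

The platform does this adjustment for you, but the estimate is useful for checking whether a spike would stay within whatever concurrency limit your account has.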

Handling Traffic Spikes: Real-World Scenarios

In real-world scenarios, serverless architectures excel during unexpected traffic spikes. For example, an e-commerce platform often experiences heavy traffic on Black Friday. With a serverless setup, the platform can handle thousands of concurrent transactions by scaling up function executions to meet demand, with little advance capacity planning beyond checking the provider’s concurrency limits.

Black Friday is where the true power of a serverless architecture can shine. Picture a major online retailer facing an influx of shoppers around lunchtime. With a traditional setup, its servers might struggle, causing downtime or slow response times. With serverless, the system adjusts dynamically, spinning up resources to accommodate the surge in traffic while keeping overhead low. This on-demand scaling lets the retailer fulfill orders efficiently, which means higher sales and satisfied customers, and it shows how serverless can improve performance during critical business events.

Architecting with Intention: Best Practices for Serverless Design

Designing a robust serverless architecture involves strategic thinking and intentional choices. By ensuring that your components are modular and independently deployable, you create a flexible system that can adapt to changes in traffic and business needs. Combining functions with managed services in an event-driven design enables smooth scaling and efficient resource utilization. Prioritize observability and logging so you can monitor performance closely and identify issues before they escalate. Overall, a deliberate architecture enhances scalability and maintainability, paving the way for successful serverless applications.

Designing Microservices for Optimal Efficiency

Microservices play a pivotal role in serverless design by allowing you to build applications as a collection of loosely coupled components. Each service can be developed, deployed, and scaled independently, which means you can optimize each microservice for its specific task. This separation not only enhances agility in development but also improves failure isolation; should one service falter, others can continue to operate smoothly. Emphasizing lightweight, stateless functions encourages faster response times and reduces operational overhead, making your architecture more efficient and reliable.

Security Considerations in Serverless Environments

Security in serverless environments must be proactively managed to protect your applications and data. Functions should follow the principle of least privilege: each should have access only to the resources it needs for execution. Implementing a robust authentication and authorization mechanism is crucial, as is encrypting sensitive data both in transit and at rest. Regularly auditing your functions and monitoring for vulnerabilities lets you address potential threats quickly and effectively. Staying informed about emerging security best practices is key to maintaining a secure serverless architecture.

In addition to the above tactics, I find that integrating security during the development lifecycle, rather than treating it as an afterthought, brings substantial benefits. Utilizing tools that provide security scanning can identify vulnerabilities before code is deployed. I also recommend incorporating runtime security monitoring tools to review function behavior and detect anomalies in real time. Establishing thorough incident response plans helps prepare your team to react swiftly in case of a security breach, minimizing potential damage. Ultimately, adopting a proactive security posture ensures a safer serverless application environment.
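As a small illustration of least privilege, the sketch below builds an AWS-style IAM policy document that lets a function read objects under one prefix of one bucket and nothing else, plus a simple check of the kind a pre-deployment scan might run. The bucket name, prefix, and the two helper functions are hypothetical; only the policy document layout (`Version`, `Statement`, `Effect`, `Action`, `Resource`) follows the IAM format.

```python
import json


def read_only_policy(bucket: str, prefix: str) -> dict:
    """Build a policy document allowing only object reads under one prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            }
        ],
    }


def has_wildcard_action(policy: dict) -> bool:
    """Flag statements granting '*' or 'service:*', a common over-permission."""
    for statement in policy["Statement"]:
        actions = statement["Action"]
        if isinstance(actions, str):
            actions = [actions]
        if any(a == "*" or a.endswith(":*") for a in actions):
            return True
    return False


policy = read_only_policy("example-bucket", "uploads/")
print(json.dumps(policy, indent=2))
print("wildcard actions:", has_wildcard_action(policy))
```

A check like `has_wildcard_action` is deliberately crude, but running something similar in your build pipeline catches the most common mistake, which is granting a function far more than it needs.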

Unpacking Limitations: When Serverless May Not Be the Solution

Despite the many advantages of serverless architecture, it’s crucial to recognize when it may not fit your project’s needs. Some scenarios can reveal limitations that could hinder development speed or escalate costs. Considerations such as application complexity, state management challenges, and vendor lock-in risks often raise concerns, making it imperative to evaluate your specific requirements before fully committing to a serverless model.

Application Complexity and State Management Challenges

In applications where state management is critical, serverless can become complicated. Stateless functions are easy to scale, but once you introduce persistent state or complex workflows, tracking user sessions or handling transactions requires an external store and can lead to performance issues. For instance, in a real-time chat application built solely on serverless functions, message history has to be shared across many short-lived function instances, and if that is not architected carefully, users may see missing or out-of-order messages.
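A common way to handle this is to keep the function itself stateless and push all state into an external store. The sketch below shows the idea for the chat example; `MessageStore`, `InMemoryStore`, and `post_message` are hypothetical names of mine, and the in-memory class only stands in for a managed database or cache so the example runs locally.

```python
from typing import Protocol


class MessageStore(Protocol):
    """Interface the handler depends on; any backing service can implement it."""
    def append(self, room: str, message: str) -> None: ...
    def history(self, room: str) -> list[str]: ...


class InMemoryStore:
    """Stand-in for a managed database or cache; suitable for local runs only."""
    def __init__(self) -> None:
        self._rooms: dict[str, list[str]] = {}

    def append(self, room: str, message: str) -> None:
        self._rooms.setdefault(room, []).append(message)

    def history(self, room: str) -> list[str]:
        return list(self._rooms.get(room, []))


def post_message(store: MessageStore, room: str, message: str) -> list[str]:
    """Stateless handler: everything it needs comes from its arguments and the store."""
    store.append(room, message)
    return store.history(room)


store = InMemoryStore()
post_message(store, "general", "hello")
print(post_message(store, "general", "anyone here?"))
```

Because the handler keeps nothing between calls, any instance can serve any request; the trade-off is an extra round trip to the store on every invocation.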

Vendor Lock-in Risks and Mitigation Strategies

Using serverless architecture can increase the risk of vendor lock-in, given that most services are tied to specific providers like AWS or Azure. Migrating applications away from these platforms can become cumbersome if developers heavily utilize proprietary features. However, you can mitigate this risk by implementing a service-oriented architecture that allows you to maintain some portability through standardized APIs or containerization techniques.

Exploring mitigation strategies for vendor lock-in is crucial for ensuring flexibility in your serverless application. Consider a multi-cloud strategy in which you avoid significant dependencies on any one provider. Frameworks that run on Kubernetes, such as Knative or OpenFaaS, provide a function-as-a-service (FaaS) layer and package your functions as containers. This fosters a clean separation from any specific cloud provider, ensuring that you have options should you need to switch or diversify your infrastructure. A common practice is to keep core business logic portable while integrating cloud-native services for enhanced functionality, allowing you to reap the benefits of a serverless environment without being tied down.
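Keeping core business logic portable usually means separating it from the provider-specific entry point. Here is a minimal sketch of that pattern: `greet` is hypothetical business logic with no cloud dependencies, `lambda_handler` is a thin adapter assuming an AWS Lambda function behind an API Gateway proxy integration (JSON string in `body`, `statusCode` and `body` in the response), and `cli_main` is a second adapter that could run in a container or on a laptop.

```python
import json


def greet(name: str) -> dict:
    """Core business logic: no cloud imports, no provider event shapes."""
    cleaned = name.strip() or "world"
    return {"message": f"Hello, {cleaned}!"}


def lambda_handler(event, context):
    """Thin adapter: translate the provider's event into a plain function call."""
    body = json.loads(event.get("body") or "{}")
    result = greet(body.get("name", ""))
    return {"statusCode": 200, "body": json.dumps(result)}


def cli_main(argv: list[str]) -> str:
    """Second adapter: the same logic from a command line or a container."""
    return json.dumps(greet(argv[1] if len(argv) > 1 else ""))


print(lambda_handler({"body": json.dumps({"name": "Ada"})}, None))
print(cli_main(["app", "Ada"]))
```

If you later move providers, only the adapter changes; `greet` and its tests stay exactly as they are.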

To wrap up

On the whole, I’ve aimed to provide you with a comprehensive understanding of serverless architecture. By exploring its benefits, challenges, and key components, I hope to empower you to make informed decisions about adopting this paradigm for your projects. As you dig deeper into serverless technologies, you’ll find them to be a flexible and efficient way to build and scale applications. With the right knowledge, you can harness the power of serverless to enhance your development experience and optimize your resource management.